Databricks: Excessive Storage Usage

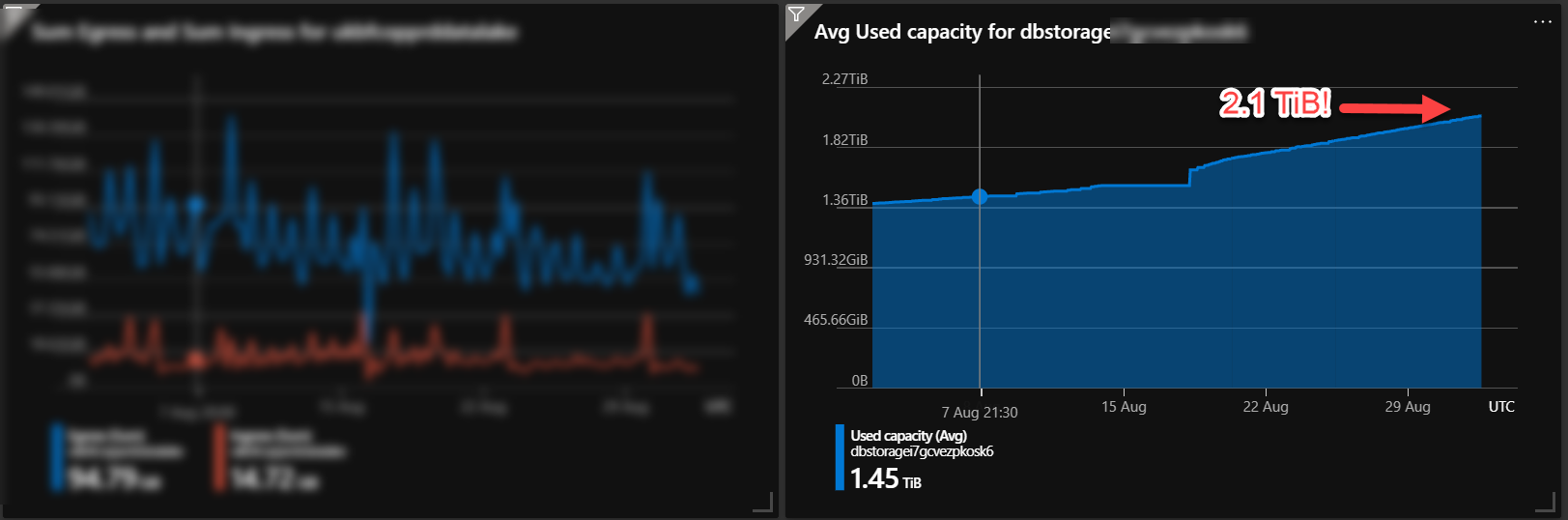

I was looking at one of the workspaces I’m looking after in MS Azure, and wow, Databricks storage has been growing steadily and has reached 2.1 TiB! I am not storing any data in DBFS at all, everything is in external tables, so it’s a bit unclear what is going on.
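A minimal sketch for double-checking the "nothing in DBFS" part, assuming it runs in a Databricks notebook where `dbutils` is predefined and `dbutils.fs.ls` returns entries with `path`, `size` and `isDir()`. The helper names `dir_size` and `usage_by_folder` are made up for illustration, and whatever the purge frees may well live outside of what DBFS shows, so treat this as a sanity check rather than a full explanation:

```python
def dir_size(ls, path):
    """Recursively sum file sizes in bytes under `path`.

    `ls` is any callable that lists a path and returns entries exposing
    `.path`, `.size` and `.isDir()` (assumed here to be `dbutils.fs.ls`).
    """
    total = 0
    for entry in ls(path):
        if entry.isDir():
            total += dir_size(ls, entry.path)
        else:
            total += entry.size
    return total


def usage_by_folder(ls, root="dbfs:/"):
    """Return {top-level path: bytes} for `root`, largest first."""
    sizes = {}
    for entry in ls(root):
        sizes[entry.path] = dir_size(ls, entry.path) if entry.isDir() else entry.size
    return dict(sorted(sizes.items(), key=lambda kv: kv[1], reverse=True))


# In a notebook (assumption: `dbutils` exists in that environment):
# for path, size in usage_by_folder(dbutils.fs.ls).items():
#     print(f"{size / 2**30:8.2f} GiB  {path}")
```

Passing `ls` in as a parameter keeps the helpers testable outside Databricks. Listing is recursive, so it can be slow on large trees, and mount points under the root would be counted too even though they point at external storage.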

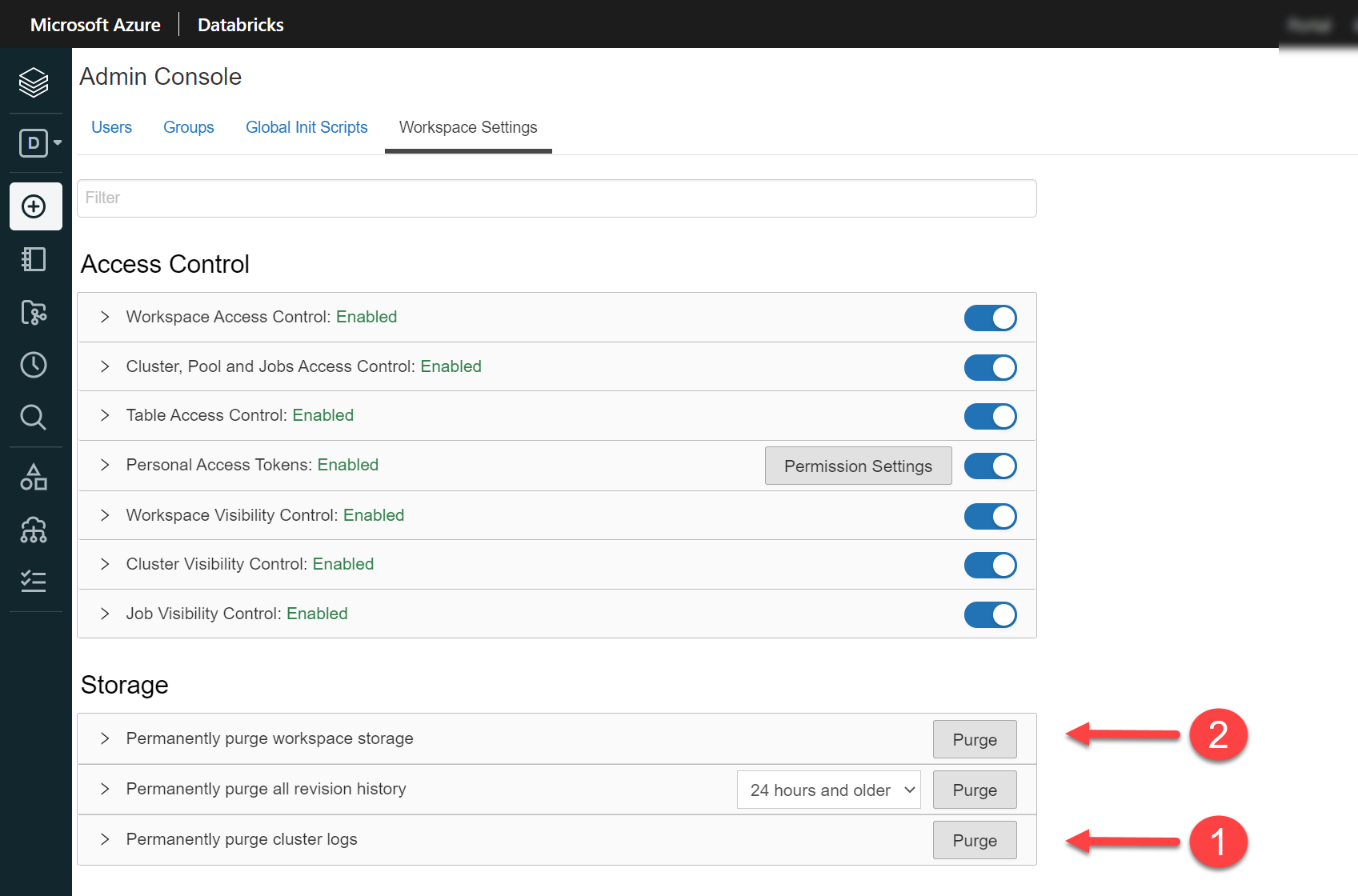

Long story short, you can significantly reduce this by purging cluster logs and workspace storage from the workspace itself:

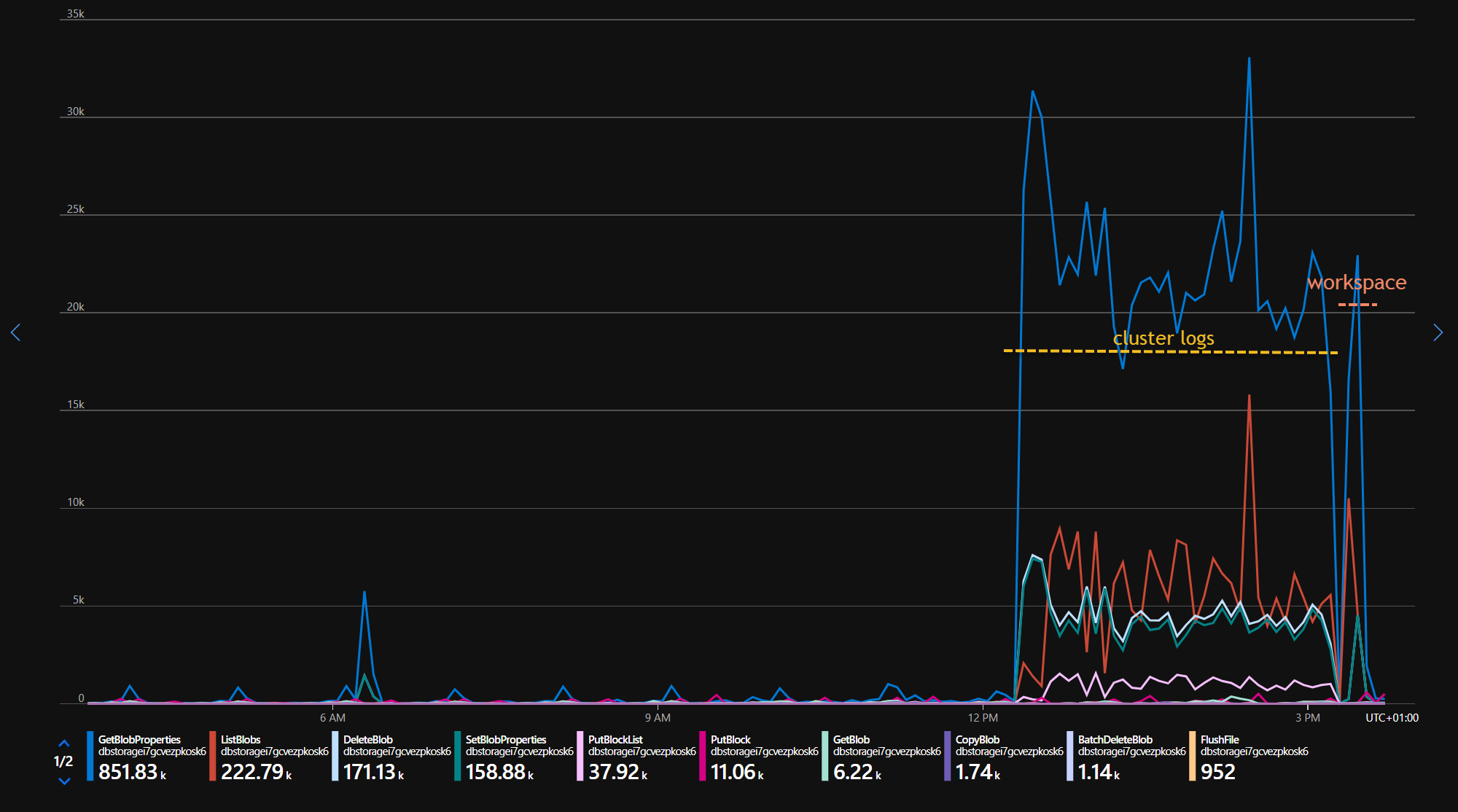

When I ran the purge, API calls to storage suddenly jumped up for both processes. The cluster logs purge ran for much longer though:

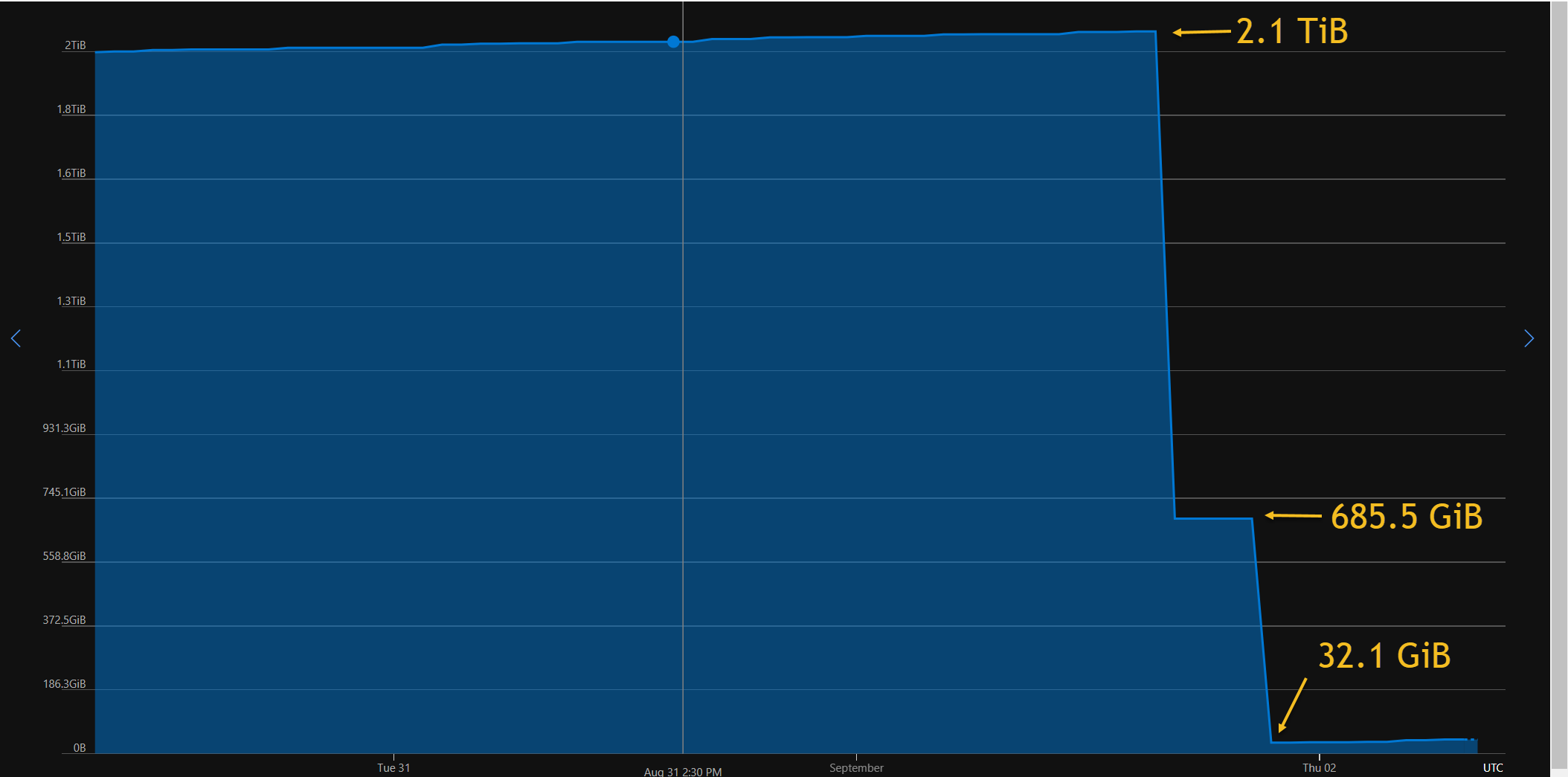

Storage usage also dropped dramatically.

However, it keeps growing steadily, at a very slow pace. I also can’t understand why there is still 32 GiB in use, which is quite a lot. There is also no way to run this on a schedule, at least I couldn’t find an API for it.
