Apache Kafka vs Azure Event Hubs: which one to use?

Apache Kafka vs Microsoft Azure Event Hubs

If you are looking for a scalable, reliable, and fast way to stream data from your applications, you might have considered using Apache Kafka or Microsoft Azure Event Hubs. Both are popular platforms that offer similar features, such as partitioned logs, consumer groups, and offsets. But what are the main differences between them and how do you choose the best one for your use case?

Architecture

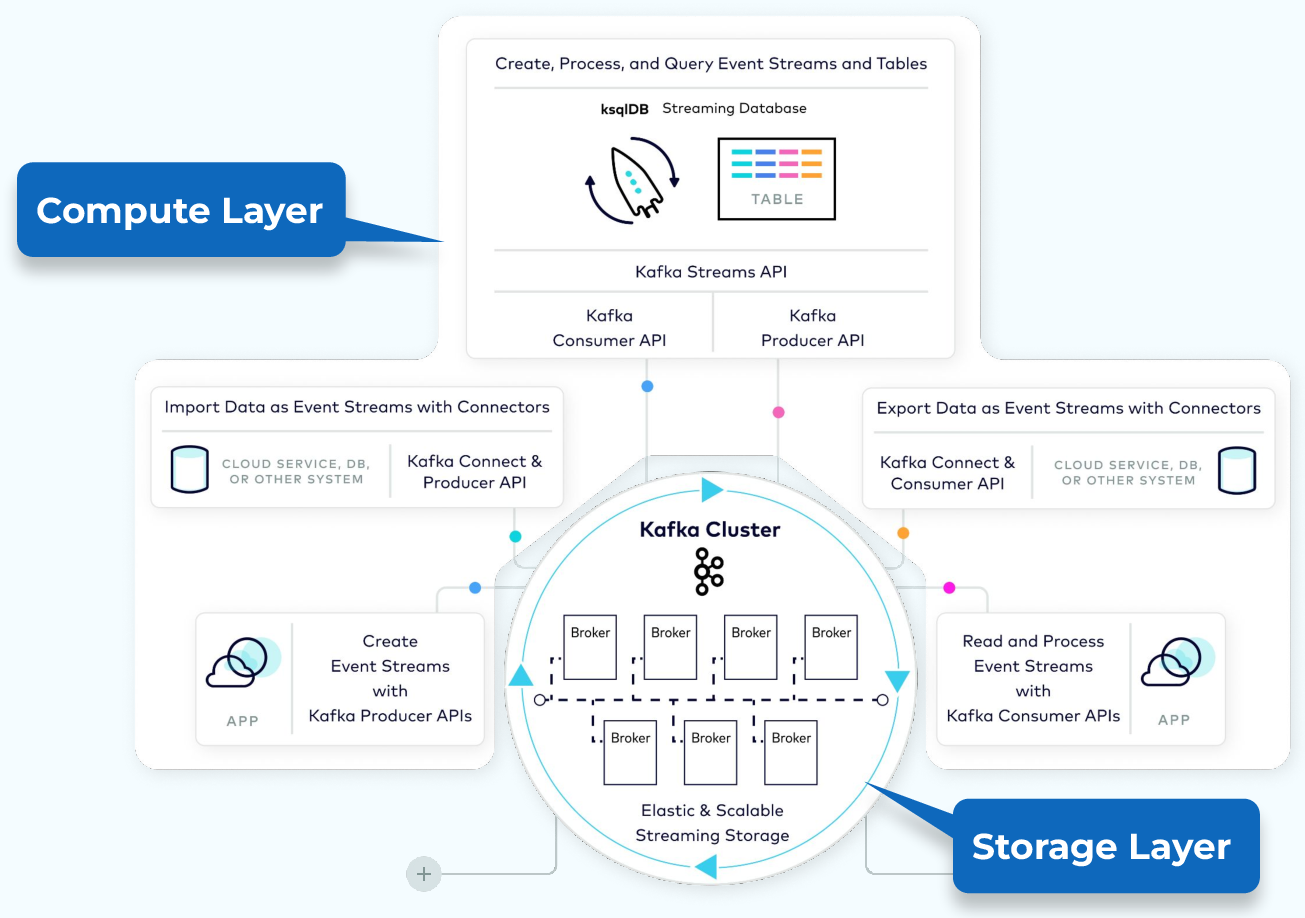

Apache Kafka

Apache Kafka is software that you typically install and operate yourself, on your own servers or on a cloud provider. It consists of a cluster of brokers that store and serve data in topics, which are divided into partitions. Each partition has a leader and, when the replication factor is greater than one, one or more followers that replicate its data for fault tolerance. Clients produce data to and consume data from topics using various APIs and client libraries.
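To make the leader/follower layout concrete, here is a simplified sketch of how partition replicas could be spread across brokers. It assumes plain round-robin placement, with the first replica acting as the leader. Kafka's real assignment also randomizes the starting broker and can take racks into account. The function name `assign_replicas` is only illustrative.

```python
def assign_replicas(num_partitions: int, brokers: list[int],
                    replication_factor: int) -> dict[int, list[int]]:
    """Place each partition's replicas on distinct brokers, round-robin.

    The first broker in each list is the partition leader; the others are followers.
    """
    if replication_factor > len(brokers):
        # Kafka also refuses a replication factor larger than the broker count.
        raise ValueError("replication factor cannot exceed the number of brokers")
    n = len(brokers)
    return {
        p: [brokers[(p + i) % n] for i in range(replication_factor)]
        for p in range(num_partitions)
    }


placement = assign_replicas(3, [101, 102, 103], replication_factor=2)
for partition, replicas in placement.items():
    print(f"partition {partition}: leader={replicas[0]} followers={replicas[1:]}")
```

Because consecutive partitions start on different brokers, leadership is spread across the cluster rather than concentrated on one machine.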

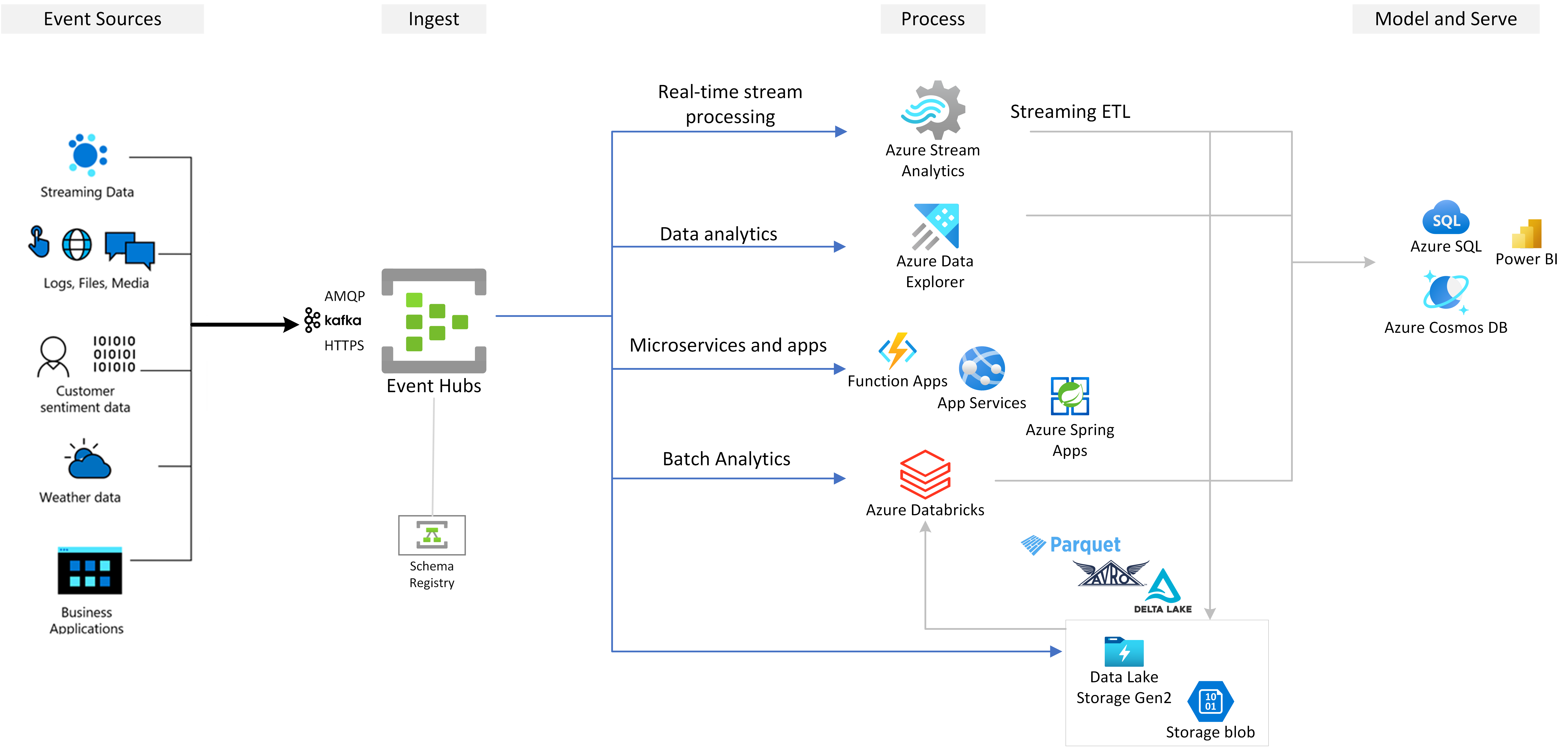

Azure Event Hubs

Azure Event Hubs is a fully managed service in the Azure cloud that, in addition to its native Event Hubs API, can expose an Apache Kafka endpoint (on the Standard, Premium, and Dedicated tiers). An event hub is equivalent to a Kafka topic and also has partitions that store and distribute data, while a namespace plays roughly the role of a Kafka cluster. Unlike with Kafka, however, you don't need to set up or configure brokers, disks, or networks. You just create a namespace, which is an endpoint with a fully qualified domain name, and then create event hubs within that namespace. Existing Kafka applications can connect to an event hub over the same Kafka protocol by changing only their configuration (the bootstrap server and credentials), not their code. This reduces the risk of vendor lock-in.
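As an illustration of connecting an existing Kafka client without code changes, the function below builds client properties for the Event Hubs Kafka endpoint. It is a sketch with a few assumptions:

- It targets a librdkafka-based client such as confluent-kafka-python, which uses the property names `sasl.username` and `sasl.password`. Java clients pass the same credentials through `sasl.jaas.config`.
- The function name is hypothetical.
- The namespace and connection string in the usage line are placeholders.

```python
def event_hubs_kafka_config(namespace: str, connection_string: str) -> dict[str, str]:
    """Build Kafka client properties that point at an Event Hubs namespace.

    The Event Hubs Kafka endpoint listens on port 9093 and authenticates with
    SASL PLAIN over TLS: the username is the literal string "$ConnectionString"
    and the password is the namespace (or event hub) connection string.
    """
    return {
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


# Placeholder values; a real connection string comes from the Azure portal or CLI.
config = event_hubs_kafka_config("my-namespace", "<connection-string>")
```

The application keeps using the event hub name as its topic name. Only these connection properties differ from a self-hosted cluster.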

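Azure Event Hubs can scale automatically (for example, with Auto-Inflate on the Standard tier). It also exposes a single virtual IP address as its endpoint, so clients don't need network access to individual brokers. Kafka, by contrast, requires more configuration and firewall access to every broker in the cluster.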

In summary, when running on Microsoft Azure, and even when you are unsure whether you will later migrate to another cloud, Azure Event Hubs is a safe choice in terms of architecture.

Performance

Both Apache Kafka and Azure Event Hubs can handle high volumes of data with low latency and high throughput. However, there are some differences in how they scale and handle backpressure.

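Apache Kafka scales by adding more brokers to the cluster and assigning partitions to them. The number of partitions in a topic sets the maximum parallelism for consumers, because within a consumer group each partition is read by at most one consumer. However, increasing the number of partitions also increases the overhead of replication and coordination among brokers.

Kafka consumers pull data at their own pace. When consumers cannot keep up with the rate of producers (a situation often called backpressure), the producers are not slowed down; the consumers simply fall behind. Kafka has no built-in mechanism that reacts to this, so the application has to monitor how far behind its consumers are and scale them out when needed. If consumers fall further behind than the topic's retention period, unread data is deleted before it is consumed, which amounts to data loss.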
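How far a consumer has fallen behind is usually measured as consumer lag. For each partition, lag is the log-end offset minus the offset the consumer group has committed.

The sketch below computes lag from plain dictionaries instead of querying a broker. It then estimates the age of the oldest unread event, assuming a steady per-partition produce rate. The function names are hypothetical.

```python
def consumer_lag(end_offsets: dict[int, int],
                 committed: dict[int, int]) -> dict[int, int]:
    """Lag per partition: log-end offset minus the group's committed offset.

    A partition with no committed offset is treated as unread from offset 0,
    which assumes retention has not deleted its earliest events yet.
    """
    return {p: end - committed.get(p, 0) for p, end in end_offsets.items()}


def oldest_unread_age_s(lag: int, produce_rate_per_s: float) -> float:
    """Approximate age of the oldest unread event at a steady produce rate."""
    return lag / produce_rate_per_s


RETENTION_S = 7 * 24 * 3600  # Kafka's default retention.ms is 7 days

lag = consumer_lag({0: 1_500, 1: 900}, {0: 1_000, 1: 900})
age = oldest_unread_age_s(lag[0], produce_rate_per_s=10.0)
# If age approaches RETENTION_S, partition 0 is about to lose unread events.
print(lag, age, age < RETENTION_S)
```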

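Azure Event Hubs scales by purchasing capacity: throughput units (TUs) on the Basic and Standard tiers, or processing units (PUs) on the Premium tier.

- **TUs:** one TU allows up to 1 MB/s or 1,000 events per second of ingress, and up to 2 MB/s or 4,096 events per second of egress, whichever limit is reached first.
- **PUs:** a PU is a larger, isolated allocation of compute and memory, so its throughput depends on the workload rather than on a fixed per-unit quota.
- **Limits:** a Standard namespace supports up to 40 TUs. Workloads that need more capacity than a Standard or Premium namespace provides can use the Dedicated tier.
- **Auto-Inflate:** on a Standard namespace, this feature automatically increases the number of TUs when you reach the throughput limit. It does not scale them back down.

Requests that exceed the purchased capacity are throttled. Like Kafka consumers, Event Hubs consumers track their position in each partition with offsets and checkpoints. If a consumer falls behind, it can catch up by reading from the offset it last checkpointed, as long as the events are still within the retention period.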
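A TU caps ingress and egress both by bytes and by event count, so whichever limit is reached first decides how many TUs a workload needs. The sketch below encodes the documented Basic/Standard per-TU limits:

- Ingress: 1 MB/s or 1,000 events/s.
- Egress: 2 MB/s or 4,096 events/s.

The function name and the example workloads are assumptions for illustration.

```python
import math

# Documented per-TU limits for the Basic and Standard tiers.
TU_INGRESS_MB_S = 1.0
TU_INGRESS_EVENTS_S = 1_000
TU_EGRESS_MB_S = 2.0
TU_EGRESS_EVENTS_S = 4_096


def required_tus(ingress_mb_s: float, ingress_events_s: float,
                 egress_mb_s: float, egress_events_s: float) -> int:
    """Smallest number of TUs that satisfies all four per-TU limits.

    Egress should include every consumer group reading the event hub.
    """
    needed = max(
        ingress_mb_s / TU_INGRESS_MB_S,
        ingress_events_s / TU_INGRESS_EVENTS_S,
        egress_mb_s / TU_EGRESS_MB_S,
        egress_events_s / TU_EGRESS_EVENTS_S,
    )
    return max(1, math.ceil(needed))


# 3.5 MB/s in, read by two consumer groups (7 MB/s out): bytes dominate -> 4 TUs.
print(required_tus(3.5, 2_000, 7.0, 4_000))
# Many small events: 5,000 events/s in dominates even at 0.5 MB/s -> 5 TUs.
print(required_tus(0.5, 5_000, 0.5, 5_000))
```

The second case shows why event size matters: small events can exhaust the per-TU event limit long before the byte limit.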

Throughput

The throughput of Apache Kafka depends on the performance of the brokers and the network. According to some benchmarks, Apache Kafka can achieve a throughput of about 2 million events per second with a 3-node cluster of r5.xlarge AWS instances.

According to some benchmarks, Azure Event Hubs Premium can achieve a throughput of about 1.8 million events per second with 100 PUs.
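Both figures are event rates, and neither states how large the events were, so they are not directly comparable. Converting them to bytes per second requires an average event size. In the sketch below, the sizes are assumptions chosen only to show the arithmetic, not values taken from the benchmarks.

```python
def events_to_mb_per_s(events_per_s: float, avg_event_bytes: float) -> float:
    """Event rate to throughput in decimal megabytes (1 MB = 1,000,000 bytes)."""
    return events_per_s * avg_event_bytes / 1_000_000


# Assumed average event sizes, for illustration only.
for label, rate in [("Kafka, 3 brokers", 2_000_000), ("Event Hubs Premium", 1_800_000)]:
    for size in (100, 1_000):
        print(f"{label}: {size} B events -> {events_to_mb_per_s(rate, size):,.0f} MB/s")
```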

Latency

Apache Kafka

Latency is the measure of how long it takes for an event to be delivered from the producer to the consumer. It depends on various factors, such as the network latency, the batching settings, the compression settings, and the processing logic.

In Kafka, batching, compression, and durability are controlled by producer configuration parameters, such as:

- linger.ms: the maximum time the producer waits to fill a batch before sending it. A higher value can increase throughput, but also latency.
- batch.size: the maximum size, in bytes, of a batch of records sent to a single partition. A larger batch can improve compression and network efficiency, but can also increase latency.
- compression.type: the compression algorithm to use (for example gzip, snappy, lz4, or zstd). Compression reduces network bandwidth and disk usage, but adds some CPU overhead.
- acks: the number of broker acknowledgments the producer requires before a write counts as successful (0, 1, or all). A higher value ensures stronger durability, but also increases latency.
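The interplay between linger.ms and batch.size can be sketched with a simple model: the producer sends a partition's batch when it is full or when linger.ms expires, whichever comes first. The two profiles and the helper function below are illustrative assumptions, not recommended settings. The value 16,384 bytes is Kafka's default batch.size.

```python
LOW_LATENCY = {
    "linger.ms": 0,              # send as soon as possible
    "batch.size": 16_384,        # Kafka's default, in bytes per partition batch
    "compression.type": "none",  # avoid compression CPU time
    "acks": "1",                 # wait for the partition leader only
}

HIGH_THROUGHPUT = {
    "linger.ms": 50,             # wait up to 50 ms to fill a batch
    "batch.size": 262_144,       # 256 KiB per partition batch
    "compression.type": "lz4",   # fewer bytes on the wire and on disk
    "acks": "all",               # wait for all in-sync replicas
}


def batching_delay_ms(config: dict, partition_bytes_per_s: float) -> float:
    """Upper bound on how long the first record of a batch waits in the producer.

    Simplified model: the batch is sent when it is full or when linger.ms expires.
    Network time, broker time, and in-flight request limits are ignored.
    """
    fill_ms = config["batch.size"] / partition_bytes_per_s * 1000
    return min(config["linger.ms"], fill_ms)


for name, cfg in [("low latency", LOW_LATENCY), ("high throughput", HIGH_THROUGHPUT)]:
    for rate in (100_000, 10_000_000):  # bytes per second on one partition
        print(name, rate, round(batching_delay_ms(cfg, rate), 1))
```

At low traffic, the high-throughput profile adds the full linger.ms to latency. At high traffic, batches fill before the timer expires, so the added delay shrinks.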

According to this benchmark, Apache Kafka can achieve a latency ranging from a few milliseconds to a few seconds, depending on these settings and the workload. The benchmark also shows how to measure the latency using different metrics, such as produce time, publish time, commit time, catch-up time, and end-to-end latency.
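End-to-end latency is usually reported as percentiles rather than as an average. The sketch below computes nearest-rank percentiles from produce and consume timestamps. It assumes that each event carries an id and that producer and consumer clocks are synchronized. The function names are illustrative.

```python
import math


def end_to_end_latencies_ms(produced_ms: dict[str, float],
                            consumed_ms: dict[str, float]) -> list[float]:
    """Sorted latencies for events seen by the consumer: consume time minus produce time."""
    return sorted(consumed_ms[k] - produced_ms[k] for k in consumed_ms)


def percentile(sorted_values: list[float], p: float) -> float:
    """Nearest-rank percentile (0 < p <= 100) of an already sorted, non-empty list."""
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


produced = {f"e{i}": 1_000.0 for i in range(100)}
consumed = {f"e{i}": 1_000.0 + i + 1 for i in range(100)}   # 1..100 ms later
latencies = end_to_end_latencies_ms(produced, consumed)
print(percentile(latencies, 50), percentile(latencies, 99))  # 50.0 99.0
```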

Azure Event Hubs

Azure Event Hubs is a scalable event ingestion service that can receive and process large volumes of events with low latency and high reliability. To optimize latency, the Event Hubs client libraries let you configure how events are sent and received. Option names differ between the SDKs for .NET, Java, Python, and JavaScript, but typical ones include:

- sendBatch: the producer method that sends a batch of events to an event hub in a single request. Batching events reduces the number of network requests and improves throughput.
- maxBatchSize: the maximum size of a batch of events. When sending, a batch cannot exceed the maximum event size allowed by the tier (1 MB on the Standard tier). Larger batches can increase latency, while smaller batches can decrease throughput.
- maxWaitTime: the maximum time to wait for a batch to fill up before sending it. Setting a wait time increases the chance of filling a batch, but also increases latency.
- enableIdempotentRetries: enables idempotent retries when sending events, so that events are not duplicated when a send is retried after a transient error or network failure. This improves reliability, but can also increase latency and resource consumption.
- Receive-side batching: consumers can also receive events in batches, with a configurable batch size and wait time. This reduces the number of network requests and improves throughput for receivers.
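The trade-off between batch size and wait time can be modeled in a few lines. This is a simplified simulation, not the Event Hubs SDK. It assumes a batch is sent as soon as the next event would overflow `max_batch_bytes`, or once its first event has waited `max_wait_ms`. The function name and parameters are hypothetical.

```python
def simulate_batches(events: list[tuple[int, int]], max_batch_bytes: int,
                     max_wait_ms: int) -> list[tuple[int, list[int]]]:
    """Group (arrival_ms, size_bytes) events into batches.

    Returns (send_time_ms, sizes) pairs. A batch is sent when the next event
    would not fit, or max_wait_ms after its first event arrived.
    """
    batches: list[tuple[int, list[int]]] = []
    current: list[int] = []
    current_bytes = 0
    opened_at = 0
    for arrival, size in events:
        if size > max_batch_bytes:
            raise ValueError("a single event is larger than the batch limit")
        # The wait time expired before this event arrived: send what we have.
        if current and arrival - opened_at >= max_wait_ms:
            batches.append((opened_at + max_wait_ms, current))
            current, current_bytes = [], 0
        # The event would overflow the batch: send the batch now.
        if current and current_bytes + size > max_batch_bytes:
            batches.append((arrival, current))
            current, current_bytes = [], 0
        if not current:
            opened_at = arrival
        current.append(size)
        current_bytes += size
    if current:
        # No more events: the last batch leaves when its wait time expires.
        batches.append((opened_at + max_wait_ms, current))
    return batches


events = [(0, 400_000), (5, 400_000), (10, 400_000), (200, 100)]
for send_time, sizes in simulate_batches(events, 1_000_000, max_wait_ms=100):
    print(send_time, len(sizes), sum(sizes))
```

With `max_wait_ms=100`, the last two events travel in separate batches sent at 110 ms and 300 ms. With `max_wait_ms=1000`, they share one batch, but that batch is not sent until 1,010 ms. This is the latency cost of waiting for fuller batches.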

Depending on these settings, Azure Event Hubs can achieve latencies ranging from tens to hundreds of milliseconds. You can monitor latency metrics using Azure Monitor or Application Insights.

Pricing

Apache Kafka is open-source software that you can download and use for free. However, you still need to pay the infrastructure costs of running and maintaining it, such as servers, disks, networks, backups, and monitoring. Depending on your cloud provider, you might also pay data transfer or load balancer fees. Alternatively, you can use a managed service such as Confluent Cloud or Amazon MSK, which offers Kafka as a service with usage-based pricing plans.
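A rough monthly estimate for self-managed Kafka adds up the infrastructure items listed above, plus the engineering time needed to operate the cluster. Every price and quantity below is a hypothetical placeholder, not a quote from any cloud provider. The function name is illustrative.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months


def self_managed_kafka_monthly_cost(
    brokers: int, broker_hourly: float,
    storage_gb: float, storage_per_gb_month: float,
    transfer_gb: float, transfer_per_gb: float,
    ops_hours: float, ops_hourly_rate: float,
) -> float:
    """Sum of compute, storage, data transfer, and operations labor for one month."""
    compute = brokers * broker_hourly * HOURS_PER_MONTH
    storage = storage_gb * storage_per_gb_month
    transfer = transfer_gb * transfer_per_gb
    people = ops_hours * ops_hourly_rate
    return compute + storage + transfer + people


# Hypothetical inputs: 3 brokers, 3 TB of disk, 1 TB of billable network traffic,
# and 20 hours of operations work per month.
total = self_managed_kafka_monthly_cost(3, 0.25, 3_000, 0.10, 1_000, 0.02, 20, 100)
print(f"${total:,.2f} per month")
```

Even with these made-up numbers, the labor term can dominate the hardware terms. That is why operational effort belongs in the comparison.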

Azure Event Hubs is a cloud service that charges for the capacity you provision per hour: TUs on the Basic and Standard tiers, or PUs on the Premium tier. On Basic and Standard, you also pay per million ingress events, while on Premium, ingress events are included in the PU price. If you need more capacity than a Standard or Premium namespace provides, you can use the Dedicated tier. It runs single-tenant clusters and is billed per capacity unit (CU) rather than per TU or PU.

Apache Kafka: The cost of running Apache Kafka varies widely with your use case. For small use cases, Confluent Cloud, a fully managed Kafka service, is very cheap: about $1 a month to produce, store, and consume a GB of data. However, as your usage grows and your requirements become more sophisticated, your costs grow too.

For medium workloads, the cost of running Apache Kafka can be substantial; for instance, running 3 clusters can cost more than $100,000 a year. On the other hand, Kafka can handle a large volume of writes with only 3-4 inexpensive servers.

Azure Event Hubs: The pricing of this fully managed, real-time data ingestion service depends on the tier and features you choose. For instance, the Basic tier costs $0.015 per hour per throughput unit, and the Standard tier costs $0.03 per hour per throughput unit. Ingress events cost $0.028 per million on both tiers. Additional features such as Capture and extended retention cost extra.
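Using the Basic and Standard prices quoted above, a monthly estimate is TU-hours times the hourly rate, plus ingress events (in millions) times the per-million rate. These prices vary by region and change over time. The sketch ignores Capture, extended retention, and Premium or Dedicated pricing. The function name is illustrative.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months
TU_HOURLY = {"basic": 0.015, "standard": 0.03}  # USD per TU per hour, as quoted
INGRESS_PER_MILLION = 0.028                      # USD per million ingress events


def event_hubs_monthly_cost(tier: str, tus: int, ingress_events: int) -> float:
    """TU charge for a full month plus the ingress-event charge."""
    capacity = tus * TU_HOURLY[tier] * HOURS_PER_MONTH
    ingress = ingress_events / 1_000_000 * INGRESS_PER_MILLION
    return capacity + ingress


# 2 Standard TUs all month and 100 million ingress events: 43.80 + 2.80 = 46.60 USD.
print(f"${event_hubs_monthly_cost('standard', 2, 100_000_000):.2f}")
```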

Integration

Both Apache Kafka and Azure Event Hubs have a wide range of integration options with other platforms and services. For example, you can use Kafka Connect to stream data between Kafka and various sources and sinks, such as databases, file systems, and cloud storage. You can also process and transform data in real time with Kafka Streams (a Java library) or ksqlDB (formerly KSQL), which uses SQL-like queries. Moreover, you can use frameworks like Spark Streaming or Flink to perform complex analytics and machine learning on Kafka data.

Azure Event Hubs also supports streaming data to various destinations using Azure services like Stream Analytics, Functions, Logic Apps, etc. You can also use Azure Databricks or HDInsight to run Spark or Flink jobs on Event Hubs data. Furthermore, you can use Azure Synapse Analytics or Power BI to perform business intelligence and reporting on Event Hubs data.

Conclusion

Apache Kafka and Azure Event Hubs are both powerful platforms for streaming data, but they have different trade-offs and advantages. Apache Kafka gives you more control and flexibility over your data pipeline, but it also requires more operational overhead and expertise. And since people are usually the most expensive resource, that operational effort is worth including in price/performance calculations.

Azure Event Hubs provides a fully managed and scalable service that is compatible with the Kafka protocol. It also has some limitations and differences from the original Kafka implementation, although the vast majority of projects will hardly notice them. Moreover, those limitations only apply when Event Hubs emulates Kafka; on its own, Event Hubs is an extremely powerful product, arguably more powerful than Kafka. Ultimately, the best choice depends on your specific requirements, preferences, and budget.

To contact me, send an email anytime or leave a comment below.