Spark Standalone Server Hosted in Ubuntu Linux

First, download Spark and extract it somewhere. In my case it is in /opt/spark on the server:

alg@mc:/opt/spark$ ls
bin data jars LICENSE logs python README.md sbin yarn
conf examples kubernetes licenses NOTICE R RELEASE work
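If you still need to unpack Spark, the step can be sketched as below. This assumes you have already downloaded a pre-built release tarball (named like spark-<version>-bin-hadoop3.tgz) from the Apache Spark downloads page; install_spark is a hypothetical helper, not something Spark ships.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): unpack a Spark release tarball so
# that bin/, sbin/, conf/ ... land directly under DEST (default /opt/spark).
# Release tarballs wrap everything in one top-level folder such as
# spark-<version>-bin-hadoop3/, which --strip-components=1 drops.
install_spark() {
  local tgz="$1" dest="${2:-/opt/spark}"
  mkdir -p "$dest"
  tar -xzf "$tgz" -C "$dest" --strip-components=1
}
```

Writing into /opt needs root, so run it from a root shell, e.g. install_spark ~/spark-<version>-bin-hadoop3.tgz /opt/spark.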

Now create a dedicated user called spark, so that Spark does not run as root:

sudo useradd -m spark

The -m flag creates the user's home folder, which Spark requires.
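Because the services will run as spark, that account also needs write access to the directories Spark writes at runtime: by default the start scripts put logs in $SPARK_HOME/logs and the worker keeps its scratch files in $SPARK_HOME/work. A minimal sketch, assuming Spark lives in /opt/spark; grant_spark_dirs is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure Spark's runtime directories exist and are
# owned by the service account. "user:" (trailing colon, no group) tells
# chown to use that user's login group, i.e. the "spark" group here.
grant_spark_dirs() {
  local user="$1" spark_home="${2:-/opt/spark}"
  local dir
  for dir in logs work; do
    mkdir -p "$spark_home/$dir"
    chown -R "$user:" "$spark_home/$dir"
  done
}
```

For the real install, call it as root: grant_spark_dirs spark /opt/spark.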

Next, I will create two systemd services: one for the Spark master and one for the worker.

Master Service

sudo vi /etc/systemd/system/spark-master.service:

[Unit]
Description=Apache Spark Master
Wants=network-online.target
After=network-online.target

[Service]
User=spark
Group=spark
Type=forking
SuccessExitStatus=143
WorkingDirectory=/opt/spark/sbin
ExecStart=/opt/spark/sbin/start-master.sh
ExecStop=/opt/spark/sbin/stop-master.sh

[Install]
WantedBy=multi-user.target

Worker Service

sudo vi /etc/systemd/system/spark-worker.service:

[Unit]
Description=Apache Spark Worker
Wants=network-online.target
After=network-online.target

[Service]
User=spark
Group=spark
Type=forking
SuccessExitStatus=143
WorkingDirectory=/opt/spark/sbin
ExecStart=/opt/spark/sbin/start-worker.sh spark://192.168.1.85:7077
ExecStop=/opt/spark/sbin/stop-worker.sh

[Install]
WantedBy=multi-user.target
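Since master and worker run on the same machine here, you may optionally want systemd to start the worker only after the master. One way is a drop-in file that adds After= and Wants= on spark-master.service without touching the unit above. A sketch, assuming the standard drop-in directory; the helper name and the file name 10-after-master.conf are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a systemd drop-in that orders spark-worker
# after spark-master (and pulls the master in when the worker starts).
# Only sensible when both services live on the same host.
write_worker_dropin() {
  local dir="${1:-/etc/systemd/system/spark-worker.service.d}"
  mkdir -p "$dir"
  # Drop-in files must end in .conf; their settings are merged into the unit.
  cat > "$dir/10-after-master.conf" <<'EOF'
[Unit]
Wants=spark-master.service
After=spark-master.service
EOF
}
```

Run it as root and follow with systemctl daemon-reload. sudo systemctl edit spark-worker is an interactive alternative that creates the same kind of override file.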

Note that the start-worker.sh script requires the address of the Spark master. In my case it is 192.168.1.85 (the server's local IP address). The master is bound to that address in /opt/spark/conf/spark-env.sh (edit it with sudo vi /opt/spark/conf/spark-env.sh), which looks like this:

export SPARK_MASTER_HOST=192.168.1.85

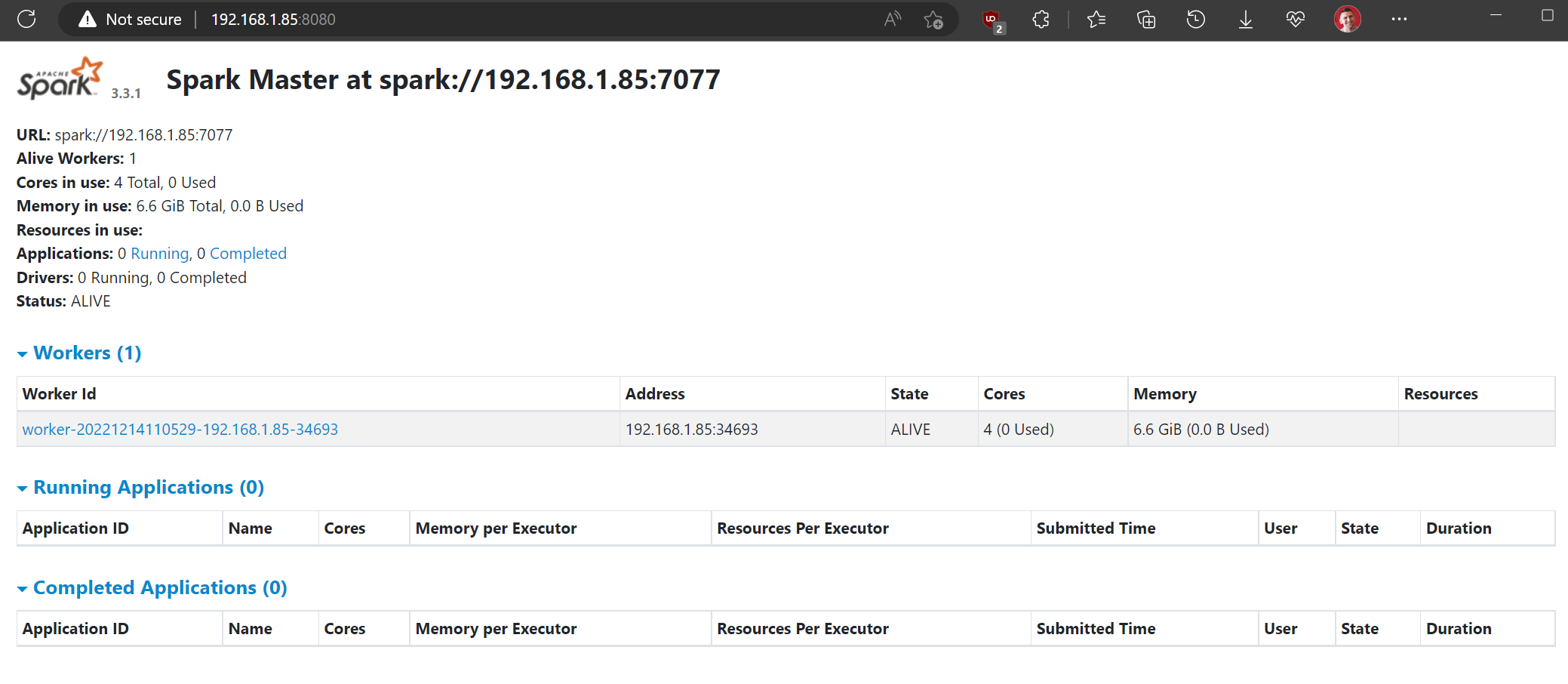
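If spark-env.sh does not exist yet, it can be created by copying conf/spark-env.sh.template. The start scripts source it as plain shell, so a slightly more explicit version might look like the sketch below. The port lines only restate Spark's defaults (7077 is the port in the spark://192.168.1.85:7077 URL the worker uses, 8080 the master web UI); the commented worker limits are hypothetical values.

```shell
# /opt/spark/conf/spark-env.sh (sketch): sourced by the sbin/start-*.sh scripts.
export SPARK_MASTER_HOST=192.168.1.85
# Spark's defaults, made explicit so the worker URL above is easy to verify.
export SPARK_MASTER_PORT=7077
export SPARK_MASTER_WEBUI_PORT=8080
# Optional, hypothetical limits on what this worker offers to applications:
# export SPARK_WORKER_CORES=4
# export SPARK_WORKER_MEMORY=8g
```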

Test

To test, register the services by running sudo systemctl daemon-reload, then start both services:

sudo systemctl start spark-master
sudo systemctl start spark-worker

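If anything goes wrong, check for errors with systemctl status spark-master (and systemctl status spark-worker); the Spark logs themselves are written to /opt/spark/logs. If everything is OK, the Active: line of the status output reads active (running).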
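An active unit does not yet prove the master accepts connections. A quick extra check is to wait until its ports answer. A minimal sketch using bash's built-in /dev/tcp redirection (so it needs bash, not sh); wait_for_port is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 as soon as HOST:PORT accepts a TCP
# connection, or 1 after TIMEOUT seconds (default 30). Relies on bash's
# /dev/tcp pseudo-device; the connection is opened in a subshell and
# closed again when that subshell exits.
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-30}"
  local deadline=$((SECONDS + timeout))
  while (( SECONDS < deadline )); do
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example: wait_for_port 192.168.1.85 7077 && wait_for_port 192.168.1.85 8080 && echo "master is up". The master web UI at http://192.168.1.85:8080 should then list the worker under Workers.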

Autostart

If you need Spark to start automatically when the server reboots, enable both services:

sudo systemctl enable spark-master
sudo systemctl enable spark-worker

Now reboot the server and Spark should still be up and running.

To contact me, send an email anytime or leave a comment below.