Create Apache Spark DataFrame in memory

Let’s say you have an in-memory list of rows and want to create a DataFrame from it:

| id | subject |
|---|---|
| 1 | Aloneguid |
| 2 | Blogging |

Using PySpark

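```python
from pyspark.sql import SparkSession

# Start (or reuse) a local Spark session with a single worker thread.
spark = (SparkSession
         .builder
         .master("local[1]")
         .getOrCreate())

# The schema is a DDL-formatted string: each column name followed by its type.
df = spark.createDataFrame([(1, "Aloneguid"), (2, "Blogging")], "id int, subject string")

df.show()
```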

```
+---+---------+
| id|  subject|
+---+---------+
|  1|Aloneguid|
|  2| Blogging|
+---+---------+
```

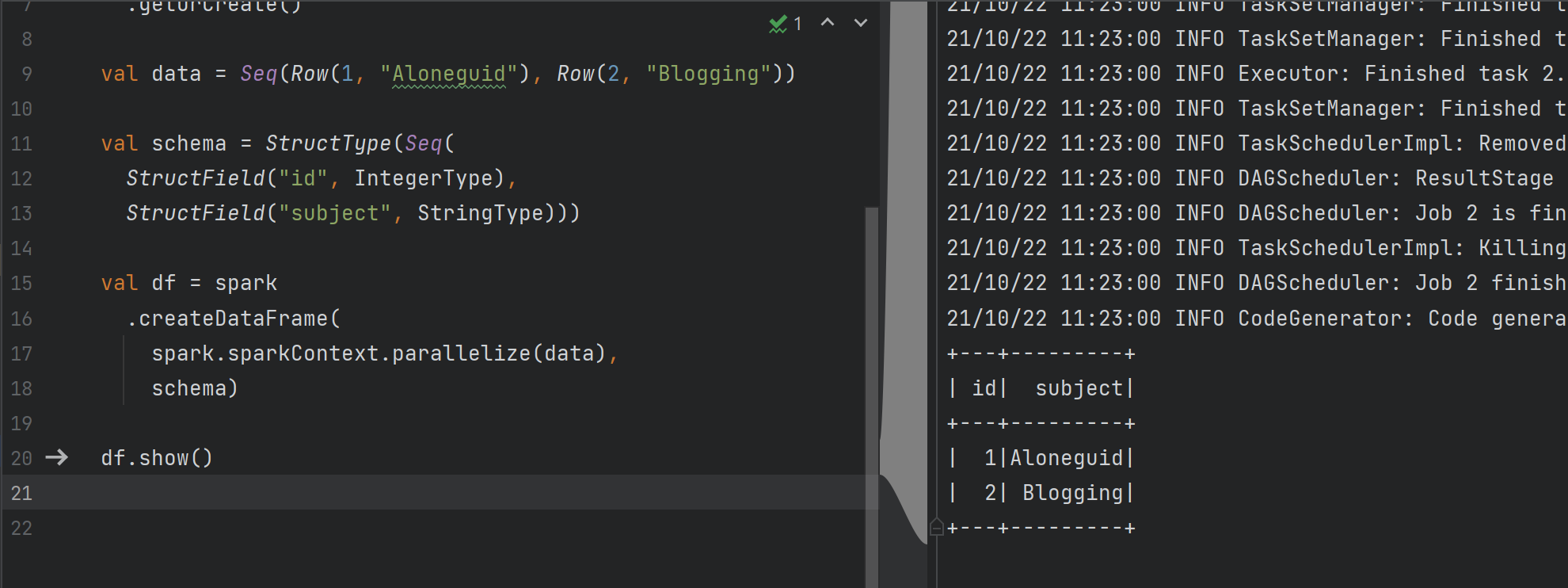

Using Scala

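```scala
import org.apache.spark.sql.{Row, SparkSession}
import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

// Start (or reuse) a local Spark session using all available cores.
val spark = SparkSession
  .builder()
  .master("local[*]")
  .getOrCreate()

// Rows hold only values; column names and types come from the schema below.
val data = Seq(Row(1, "Aloneguid"), Row(2, "Blogging"))

val schema = StructType(Seq(
  StructField("id", IntegerType),
  StructField("subject", StringType)))

// This createDataFrame overload takes an RDD[Row], so the Seq is parallelized first.
val df = spark
  .createDataFrame(
    spark.sparkContext.parallelize(data),
    schema)

df.show()
```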
